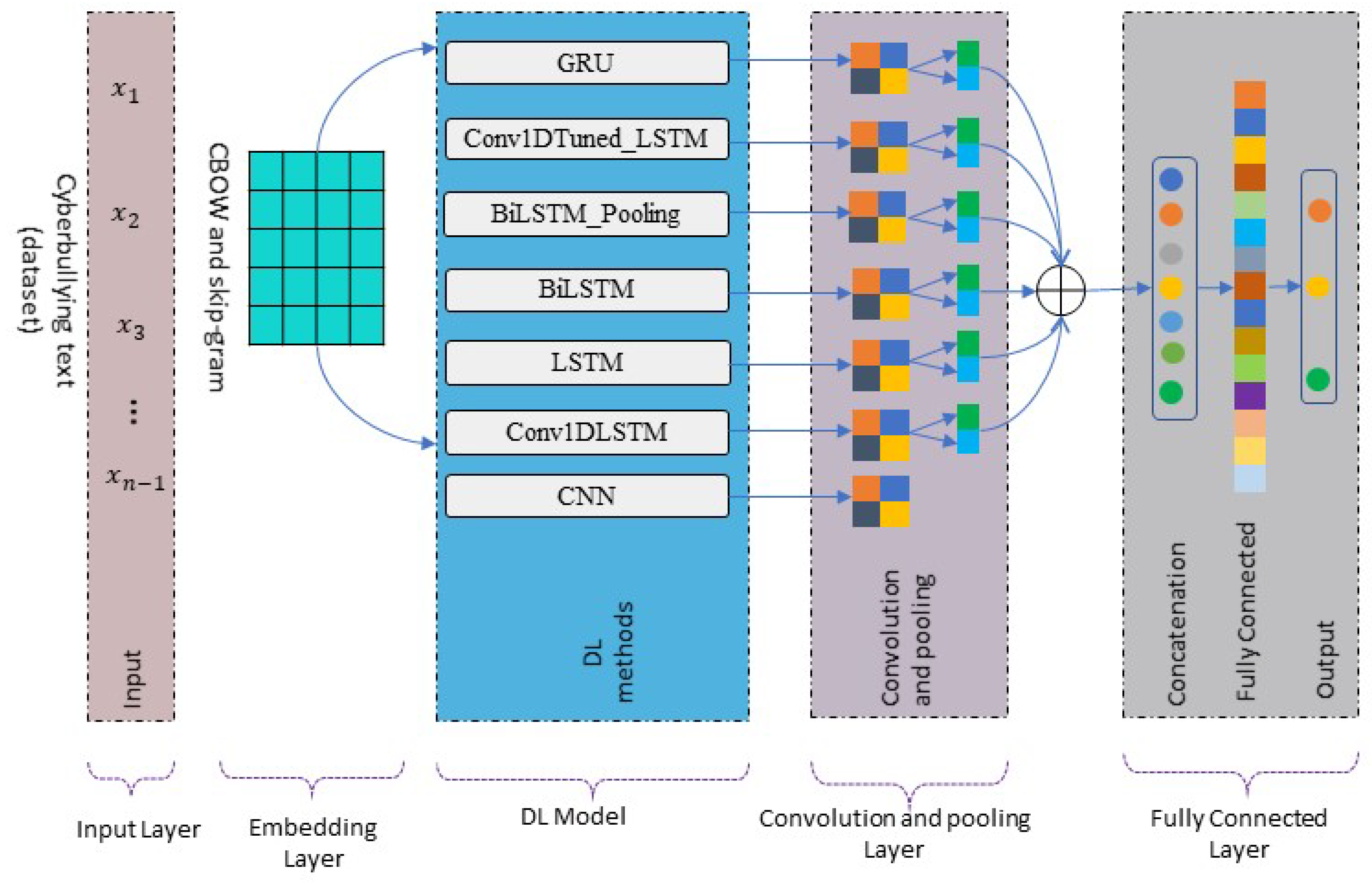

Mathematics | Free Full-Text | Cyberbullying Detection on Twitter Using Deep Learning-Based Attention Mechanisms and Continuous Bag of Words Feature Extraction

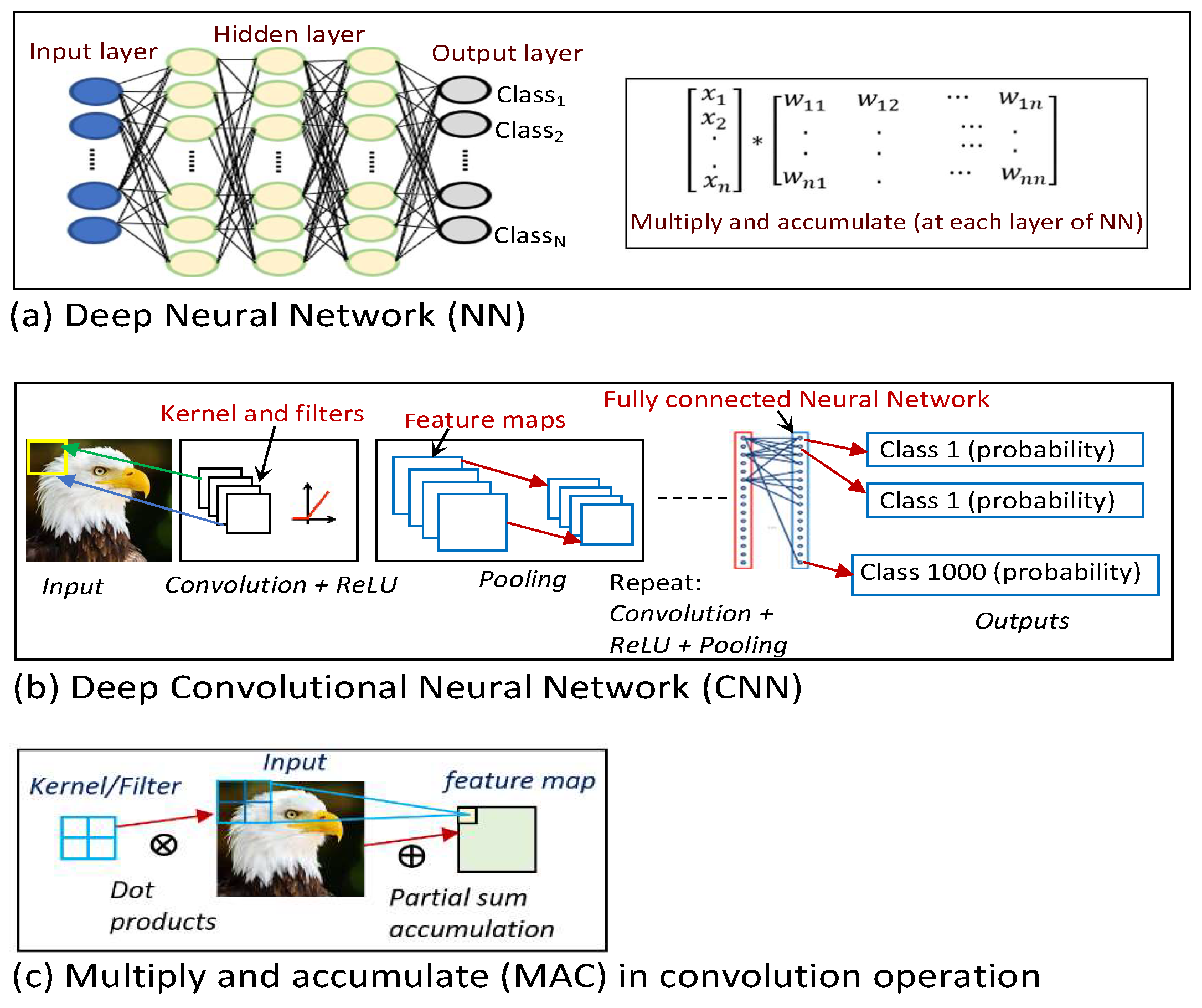

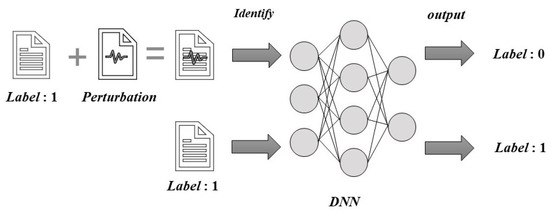

Information | Free Full-Text | Attacking Deep Learning AI Hardware with Universal Adversarial Perturbation

Machine Learning is Fun Part 8: How to Intentionally Trick Neural Networks | by Adam Geitgey | Medium

Diagram showing image classification of real images (left) and fooling... | Download Scientific Diagram

computer vision - How is it possible that deep neural networks are so easily fooled? - Artificial Intelligence Stack Exchange

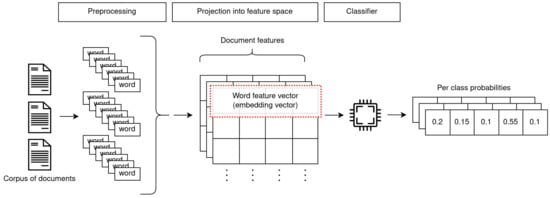

Multi-Class Text Classification with Extremely Small Data Set (Deep Learning!) | by Ruixuan Li | Medium

Towards Faithful Explanations for Text Classification with Robustness Improvement and Explanation Guided Training - ACL Anthology

3 practical examples for tricking Neural Networks using GA and FGSM | Blog - Profil Software, Python Software House With Heart and Soul, Poland

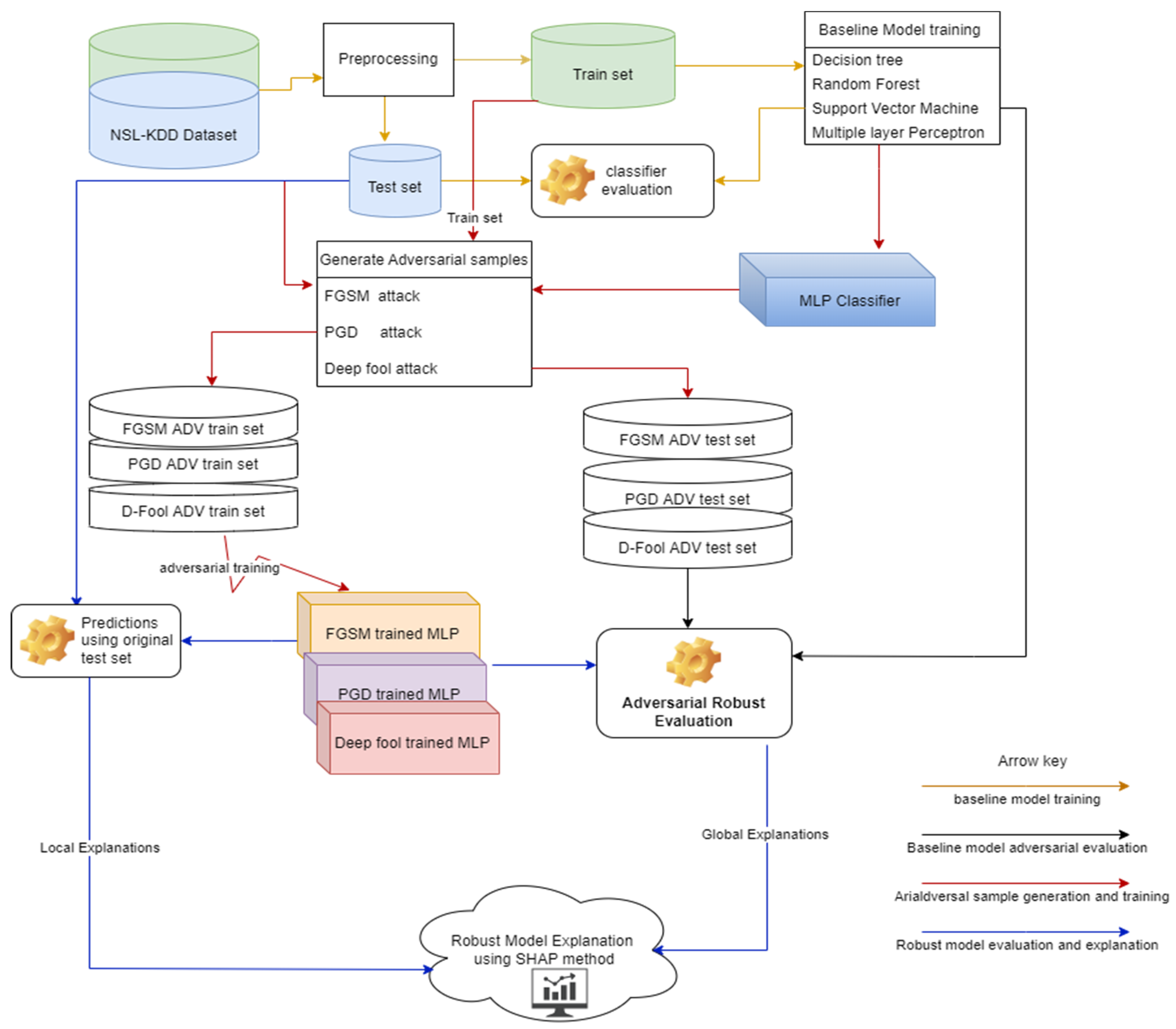

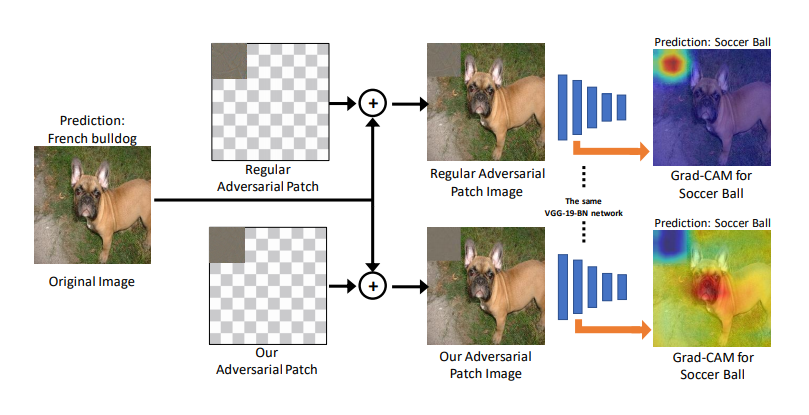

Applied Sciences | Free Full-Text | Adversarial Robust and Explainable Network Intrusion Detection Systems Based on Deep Learning

Text Classification: Unleashing the Power of Hugging Face — Part 1 | by Henrique Malta | Dec, 2023 | Medium

![[PDF] Deep Text Classification Can be Fooled | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/ca062c5e48d230d0ac51da96d492f3cb4bf82b39/5-Figure9-1.png)

![[PDF] Deep Text Classification Can be Fooled | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/ca062c5e48d230d0ac51da96d492f3cb4bf82b39/2-Figure1-1.png)

![[PDF] Deep Text Classification Can be Fooled | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/ca062c5e48d230d0ac51da96d492f3cb4bf82b39/5-Table3-1.png)

![[PDF] Deep Text Classification Can be Fooled | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/ca062c5e48d230d0ac51da96d492f3cb4bf82b39/2-Table1-1.png)

![Why does changing a pixel break Deep Learning Image Classifiers [Breakdowns]](https://substackcdn.com/image/fetch/f_auto,q_auto:good,fl_progressive:steep/https%3A%2F%2Fsubstack-post-media.s3.amazonaws.com%2Fpublic%2Fimages%2F0285e2d6-66d6-416c-a9c2-99d72fd686ae_425x600.png)

![[PDF] Deep Text Classification Can be Fooled | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/ca062c5e48d230d0ac51da96d492f3cb4bf82b39/5-Table4-1.png)